Top Cybersecurity Concerns Related to GenAI Are Escalating Faster Than Controls


Cybersecurity concerns related to GenAI are no longer emerging risks. They are already shaping enterprise security strategies, national preparedness, and boardroom decisions. As Generative AI (GenAI) moves rapidly from experimentation into everyday operations, organizations are discovering that security frameworks are not evolving at the same pace.

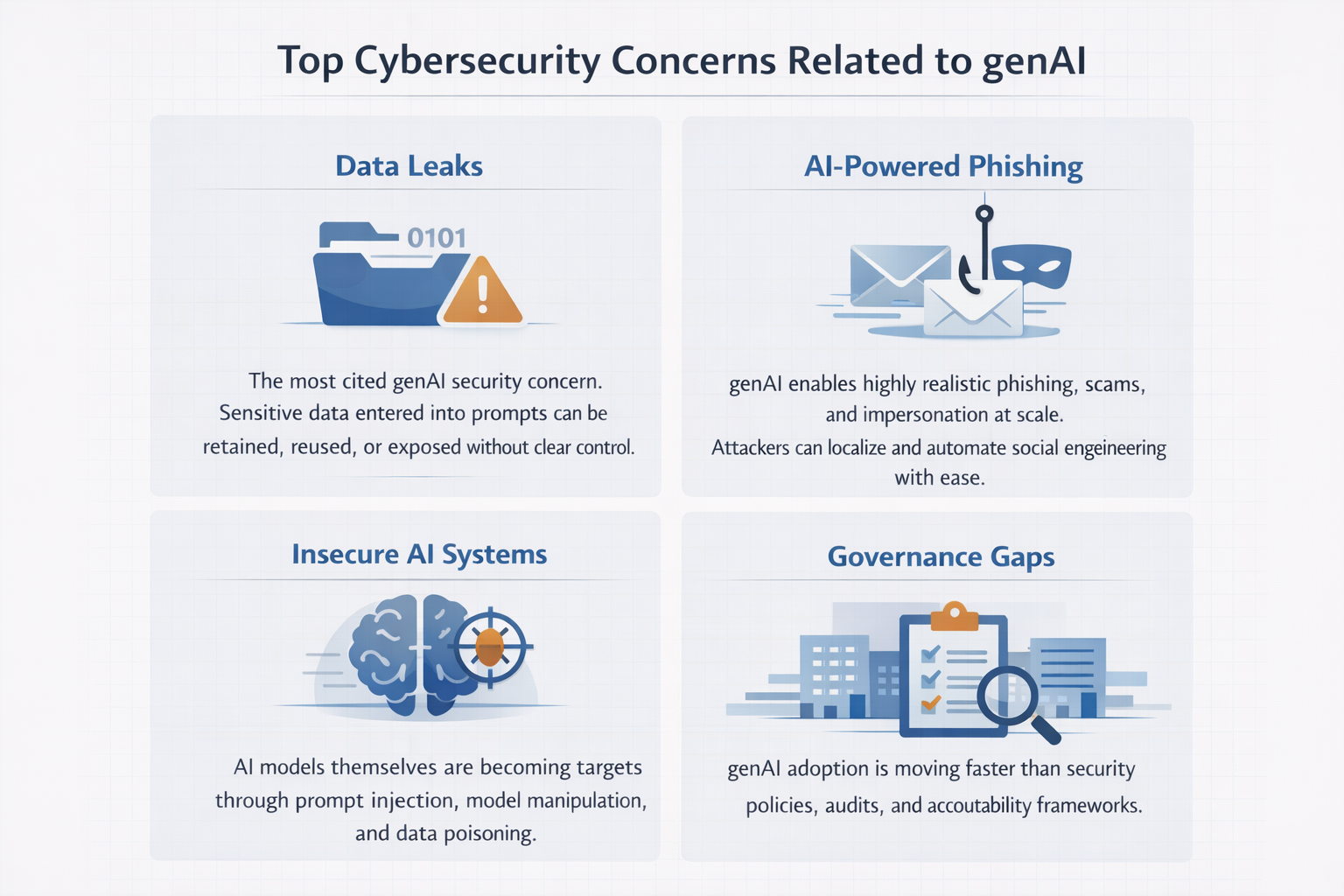

The challenge is not adoption. The challenge is control. According to the Global Cybersecurity Outlook 2026 by the World Economic Forum, GenAI is now one of the strongest forces reshaping cyber risk across industries, geographies, and supply chains.

Key points at a glance

- Data leaks are the most-cited cybersecurity concern related to GenAI

- GenAI is amplifying attacker speed and scale

- Governance and accountability lag behind deployment

- AI systems and agents are becoming new attack surfaces

- Skills gaps and uneven maturity increase ecosystem risk

Data Leaks Are the Most Immediate GenAI Risk

Among all cybersecurity concerns related to GenAI, data exposure ranks highest. The report shows that data leaks are the top GenAI-related concern for CEOs, reflecting growing anxiety about how sensitive information is handled inside AI systems.

Many GenAI tools retain conversation context, and some providers log prompts or use them to improve their models. Employees may paste confidential data into prompts without realizing it could be stored, reused, or exposed through model behavior. Once sensitive data enters a GenAI workflow, ownership and traceability become unclear.

This risk increases when organizations rely on third-party GenAI platforms without strict data governance or contractual protections.
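One common control is to scrub obvious secrets from prompts before they leave the organization. The sketch below is a minimal, hypothetical redaction pass, assuming prompts can be intercepted as plain strings before they reach a third-party service; the pattern names and `redact_prompt` function are illustrative, and the three regexes cover only a tiny fraction of what a real data-loss-prevention (DLP) policy would need.

```python
import re

# Illustrative patterns only (hypothetical policy); real DLP rules are far broader.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # AWS access key IDs start with "AKIA" followed by 16 uppercase letters/digits.
    "AWS_KEY_ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def redact_prompt(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a placeholder tag and count what was removed."""
    counts: dict[str, int] = {}
    for label, pattern in REDACTION_PATTERNS.items():
        prompt, n = pattern.subn(f"[{label}]", prompt)
        if n:
            counts[label] = n
    return prompt, counts


if __name__ == "__main__":
    raw = "Summarize: contact jane.doe@example.com, SSN 123-45-6789, key AKIAABCDEFGHIJKLMNOP"
    clean, found = redact_prompt(raw)
    print(clean)  # Summarize: contact [EMAIL], SSN [US_SSN], key [AWS_KEY_ID]
    print(found)  # {'EMAIL': 1, 'US_SSN': 1, 'AWS_KEY_ID': 1}
```

Returning the counts alongside the cleaned text gives security teams a record of what was caught, which supports the traceability the paragraph above says is often lost.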

GenAI Is Strengthening Attackers Faster Than Defenders

Another major driver of cybersecurity concerns related to GenAI is the rapid adoption of these tools by threat actors. The report notes that 29% of leaders are most concerned about the advancement of adversarial capabilities enabled by AI.

Attackers use GenAI to automate phishing, generate convincing deepfakes, localize scams, and scale social engineering. These attacks are faster, cheaper, and harder to detect. Meanwhile, defenders remain bound by compliance, review cycles, and governance constraints.

GenAI Is Deepening the Gap Between Attackers and Defenders

The report highlights a structural imbalance. GenAI allows attackers to move quickly and adapt instantly. Defenders must validate tools, document risks, and secure approvals before deployment.

This asymmetry means even mature security teams struggle to keep up. Cybersecurity concerns related to GenAI are not just technical issues. They are organizational and systemic challenges that require new defensive models.

Over-Automation Is Creating New Security Blind Spots

AI-driven security tools improve detection and response, but over-automation introduces risk. One of the quieter cybersecurity concerns related to GenAI is excessive trust in automated decisions without human oversight.

False positives, missed context, and unchallenged AI outputs can accumulate silently. GenAI does not remove the need for judgment. It shifts where judgment is required. The report cautions that human validation remains critical.
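Human validation can be built into the pipeline rather than left to chance. The following is a minimal sketch, assuming an AI triage tool emits a proposed action and a confidence score; the `AIVerdict` type, the action names, and the 0.95 threshold are all hypothetical. High-impact actions, including closing an alert (where missed context tends to accumulate silently), always go to an analyst, and only low-impact, high-confidence suggestions run automatically.

```python
from dataclasses import dataclass


@dataclass
class AIVerdict:
    alert_id: str
    action: str        # hypothetical action names, e.g. "enrich_alert", "isolate_host"
    confidence: float  # model-reported score in [0, 1]


# Hypothetical policy values; each organization would tune these to its risk appetite.
AUTO_APPROVE_THRESHOLD = 0.95
HIGH_IMPACT_ACTIONS = {"isolate_host", "disable_account", "close_alert"}


def route(verdict: AIVerdict) -> str:
    """Return 'auto' only for low-impact, high-confidence verdicts; otherwise 'human_review'."""
    if verdict.action in HIGH_IMPACT_ACTIONS:
        return "human_review"
    if verdict.confidence < AUTO_APPROVE_THRESHOLD:
        return "human_review"
    return "auto"


if __name__ == "__main__":
    print(route(AIVerdict("A-1", "enrich_alert", 0.99)))  # auto
    print(route(AIVerdict("A-2", "enrich_alert", 0.80)))  # human_review
    print(route(AIVerdict("A-3", "close_alert", 0.99)))   # human_review
```

Gating on the type of action as well as on confidence matters because model confidence scores can be high even when the output is wrong.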

AI Systems Themselves Are Becoming Attack Surfaces

Cybersecurity concerns related to GenAI extend beyond misuse. The AI systems themselves are now targets. Prompt injection, model manipulation, training data poisoning, and abuse of AI agents are emerging attack techniques. They sit within a broader shift in which cyber-enabled fraud has overtaken ransomware as a top global risk, affecting 73% of organizations, a sign of how AI-powered attacks are reshaping the threat landscape.

As GenAI integrates deeper into business systems, a single compromised model can affect multiple workflows at once. This creates cascading risk rather than isolated incidents.

GenAI Is Expanding Identity and Access Risks

AI agents and copilots are driving rapid growth in machine identities. Each of these systems needs credentials, permissions, and access to sensitive data.

The report highlights that without zero-trust principles applied to AI agents, GenAI systems can quietly accumulate excessive privileges. Managing machine identities is becoming as critical as managing human users, adding another layer to cybersecurity concerns related to GenAI.
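One ingredient of zero trust for AI agents is deny-by-default authorization, paired with regular review of grants the agent never uses. The sketch below is a hypothetical, minimal model of that idea, assuming each agent's permissions can be expressed as `(resource, operation)` pairs and that usage is logged; `AgentIdentity`, `authorize`, and `unused_grants` are illustrative names, not part of any product or standard.

```python
from dataclasses import dataclass, field


@dataclass
class AgentIdentity:
    name: str
    # Explicit allow-list of (resource, operation) pairs; anything absent is denied.
    grants: set[tuple[str, str]] = field(default_factory=set)


def authorize(agent: AgentIdentity, resource: str, operation: str) -> bool:
    """Deny by default: permit only operations explicitly granted to this agent."""
    return (resource, operation) in agent.grants


def unused_grants(agent: AgentIdentity, observed: set[tuple[str, str]]) -> set[tuple[str, str]]:
    """Grants never exercised during the observation window: candidates for revocation."""
    return agent.grants - observed


if __name__ == "__main__":
    copilot = AgentIdentity(
        "support-copilot",
        {("tickets", "read"), ("tickets", "comment"), ("crm", "export")},
    )
    print(authorize(copilot, "tickets", "read"))   # True
    print(authorize(copilot, "payroll", "read"))   # False
    print(unused_grants(copilot, {("tickets", "read"), ("tickets", "comment")}))  # {('crm', 'export')}
```

The `unused_grants` check targets the "quiet accumulation" problem directly. A permission that no workflow exercises is exposure with no business value, so it should be reviewed and removed.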

Uneven AI Security Maturity Increases Ecosystem Risk

Highly resilient organizations are far more likely to assess GenAI security and review it continuously. Less resilient organizations often deploy tools without formal evaluation.

This uneven maturity increases systemic risk. Attackers increasingly target weaker organizations that run insecure GenAI tools, using them as a way into larger partner networks. Cybersecurity concerns related to GenAI therefore extend beyond individual enterprises.

GenAI Introduces Hidden Supply Chain Exposure

Supply chain risk is becoming harder to detect. Vendors may use GenAI internally with weak controls, exposing shared data or systems without any direct breach.

This inheritance risk means strong internal security is not enough. A single supplier misusing GenAI can expose multiple downstream partners.

Skills Shortages Are Amplifying GenAI Security Risks

The report shows that more than half of organizations cite a lack of skills and expertise as a barrier to securing AI systems. This gap affects AI risk assessment, governance, and incident response.

Without focused upskilling, GenAI adoption will continue to outpace security readiness, intensifying cybersecurity concerns related to GenAI.

Falling Confidence in National Cyber Preparedness

At the national level, confidence is declining. According to the report, 31% of leaders have low confidence in their country's ability to respond to major cyber incidents. As GenAI enables automated, cross-border attacks, weak preparedness becomes a major risk multiplier.

Cybersecurity concerns related to GenAI now intersect with critical infrastructure and public safety, not just enterprise IT.

To Sum Up

GenAI is not optional, but unmanaged GenAI is risky. Organizations that treat it like conventional software underestimate its impact on data, identity, and trust. Addressing cybersecurity concerns related to GenAI requires governance, visibility, human oversight, and continuous assessment. Without these, innovation will continue to outpace security.

FAQs

What are the biggest cybersecurity concerns related to GenAI?

Data leaks, AI-powered phishing, governance gaps, insecure AI systems, and identity risks.

Why are data leaks a major GenAI issue?

Because GenAI systems may retain, log, or reuse what users enter, it is difficult to control where sensitive inputs and outputs end up.

How are attackers using GenAI today?

To automate phishing, generate deepfakes, localize scams, and scale social engineering.

Are organizations assessing GenAI security before deployment?

More are doing so, but many still lack formal or continuous assessment processes.

Can GenAI improve cybersecurity?

Yes, but only when deployed with strong governance and human oversight.